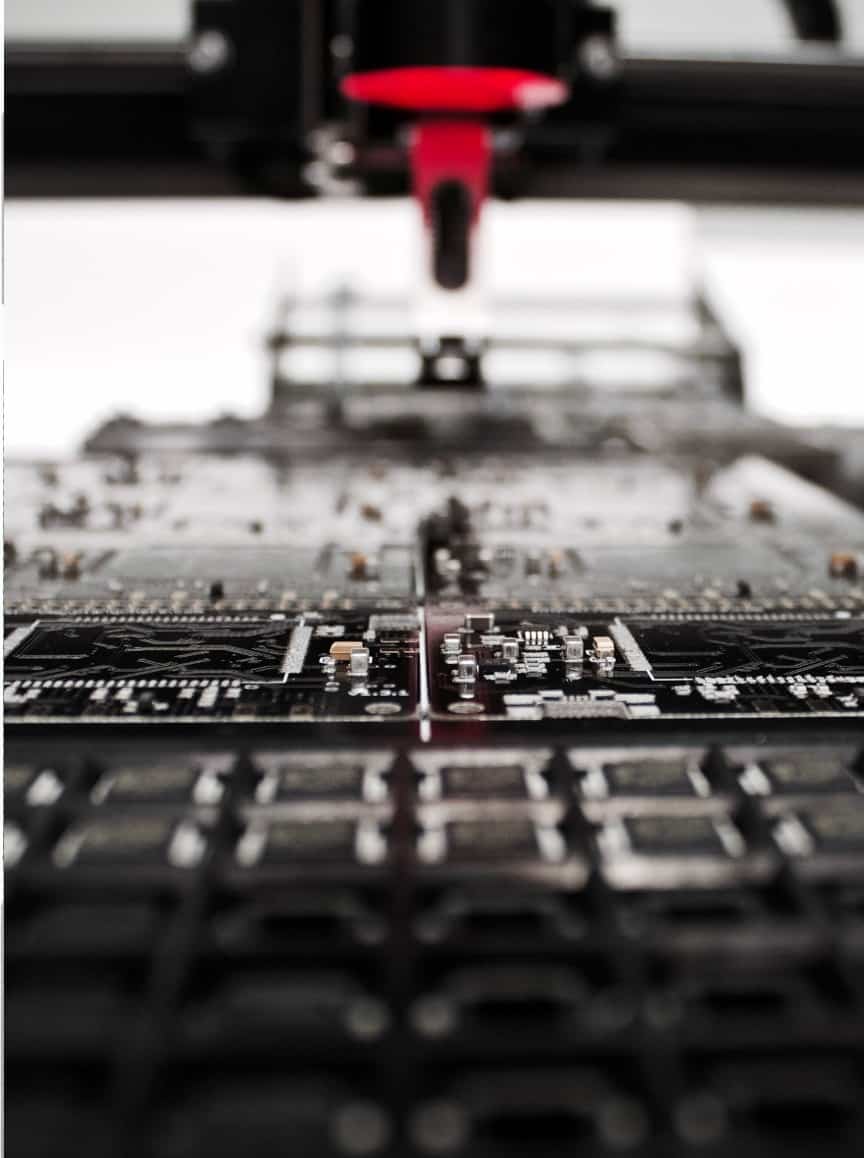

Industry solutions

DigitalGate develops custom algorithms for resource-constrained embedded systems, optimized for the performance limits of each target platform. Our solutions are designed to port easily to new hardware platforms, so they can adapt to changing market needs and industry standards.

We have deep expertise in implementing algorithms along the entire processing pipeline, from low-level data filtering and enhancement up to high-level data fusion and dynamic modeling of systems.

We implement low-level filtering algorithms on embedded platforms as building blocks for higher-level algorithms, exposing fast, efficient APIs for signal filtering, denoising, and feature detection.
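As an illustration of the kind of denoising primitive described above, here is a minimal sliding-window median filter, which removes isolated spikes while preserving step changes better than a moving average. The function name `median_filter`, the edge-replication policy, and the default window size are assumptions for this sketch, not a description of any DigitalGate API.

```python
from typing import List


def median_filter(signal: List[float], window: int = 3) -> List[float]:
    """Sliding-window median filter (illustrative sketch).

    Edges are handled by replicating the first/last sample (an assumed policy).
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    n = len(signal)
    out = []
    for i in range(n):
        # Clamp neighbor indices to [0, n - 1] so the window never leaves the signal.
        neighborhood = sorted(
            signal[min(max(j, 0), n - 1)] for j in range(i - half, i + half + 1)
        )
        out.append(neighborhood[half])  # middle element of the sorted window
    return out


noisy = [1.0, 1.0, 9.0, 1.0, 1.0]  # a single-sample spike
print(median_filter(noisy))        # [1.0, 1.0, 1.0, 1.0, 1.0]
```

On a microcontroller, the per-sample sort would usually be replaced by an incremental structure, but the input/output behavior is the same.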


We develop low-level feature detectors for 1D, 2D, and 3D signals, including images and point clouds. Our algorithms support features such as HOG, SIFT, Haar-like features (as used by the Viola-Jones detector), corners, and edges, all optimized for real-time embedded applications on resource-constrained platforms.
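Edge detection is one of the simplest feature detectors in this family. The sketch below computes the Sobel gradient magnitude on a small grayscale image stored as nested lists; Sobel is chosen here purely as an illustrative example (corner, HOG, and SIFT detectors are not shown), and the `sobel_magnitude` name and the zero-border convention are assumptions.

```python
import math
from typing import List

Image = List[List[float]]

# 3x3 Sobel kernels for horizontal (x) and vertical (y) intensity gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(img: Image) -> Image:
    """Return |G| = sqrt(Gx^2 + Gy^2) for interior pixels; border pixels are left at 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0.0
            # Correlate the 3x3 neighborhood with both kernels.
            for ky in range(3):
                for kx in range(3):
                    p = img[y + ky - 1][x + kx - 1]
                    gx += SOBEL_X[ky][kx] * p
                    gy += SOBEL_Y[ky][kx] * p
            out[y][x] = math.hypot(gx, gy)
    return out


# A vertical step edge between columns 2 and 3.
step = [[0, 0, 0, 10, 10],
        [0, 0, 0, 10, 10],
        [0, 0, 0, 10, 10]]
print(sobel_magnitude(step)[1])  # [0.0, 0.0, 40.0, 40.0, 0.0]
```

Thresholding the magnitude image then yields a binary edge map that higher-level stages can consume.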

We create image clustering algorithms using techniques such as K-Means, Mean Shift, DBSCAN, and hierarchical clustering to group similar images, which improves the reliability of higher-level data processing.
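To make the K-Means step concrete, here is a minimal sketch of Lloyd's algorithm on 1D values, for example separating dark and bright pixel intensities. The caller-supplied initial centers, the iteration cap, and the `kmeans_1d` name are assumptions for illustration; practical implementations usually work on multi-dimensional feature vectors and often use k-means++ initialization.

```python
from typing import List, Tuple


def kmeans_1d(values: List[float], centers: List[float], iters: int = 20) -> Tuple[List[float], List[int]]:
    """Lloyd's K-Means on scalar data with caller-supplied initial centers (sketch)."""
    centers = list(centers)
    labels = [0] * len(values)
    for _ in range(iters):
        # Assignment step: each value goes to its nearest center.
        labels = [min(range(len(centers)), key=lambda k: abs(v - centers[k])) for v in values]
        # Update step: each center moves to the mean of its assigned values.
        new_centers = []
        for k in range(len(centers)):
            members = [v for v, lab in zip(values, labels) if lab == k]
            new_centers.append(sum(members) / len(members) if members else centers[k])
        if new_centers == centers:  # converged: assignments can no longer change
            break
        centers = new_centers
    return centers, labels


pixels = [10, 12, 11, 200, 205, 210]  # hypothetical grayscale intensities
print(kmeans_1d(pixels, [0.0, 255.0]))  # ([11.0, 205.0], [0, 0, 0, 1, 1, 1])
```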

We develop low-level computer vision systems to acquire, stream, and process images while adhering to strict application requirements and hardware limitations, especially on low-power platforms.

We create object tracking algorithms that improve on raw detection results by handling occlusions, missed detections, and false positives. Our solutions support both 2D and 3D object tracking, using feature-based and optical-flow-based trackers, 3D estimators, and dynamic motion models within a Bayesian framework.
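The Kalman filter is the linear-Gaussian special case of Bayesian tracking, and it shows how a dynamic model bridges missed detections: when no measurement arrives, the filter simply predicts. The sketch below tracks a 1D position with a constant-velocity model. The class name, the noise values `q` and `r`, and the simplified diagonal process noise are assumptions chosen for illustration, not tuned parameters.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class KalmanCV1D:
    """Constant-velocity Kalman filter; state x = [position, velocity] (illustrative sketch)."""
    q: float = 1e-3  # process noise added to each diagonal entry of P (simplified, assumed)
    r: float = 0.5   # measurement noise variance (assumed)
    x: List[float] = field(default_factory=lambda: [0.0, 0.0])
    P: List[List[float]] = field(default_factory=lambda: [[10.0, 0.0], [0.0, 10.0]])

    def predict(self, dt: float) -> None:
        # x <- F x with F = [[1, dt], [0, 1]]
        p, v = self.x
        self.x = [p + dt * v, v]
        # P <- F P F^T + Q, expanded by hand for the 2x2 case
        (a, b), (c, d) = self.P
        self.P = [[a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
                  [c + dt * d, d + self.q]]

    def update(self, z: float) -> None:
        # Position-only measurement: H = [1, 0]
        (a, b), (c, d) = self.P
        s = a + self.r            # innovation variance
        k0, k1 = a / s, c / s     # Kalman gain
        y = z - self.x[0]         # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * a, (1 - k0) * b],
                  [c - k1 * a, d - k1 * b]]

    def step(self, z: Optional[float], dt: float = 1.0) -> float:
        self.predict(dt)
        if z is not None:  # a missed detection skips the update and coasts on the model
            self.update(z)
        return self.x[0]


kf = KalmanCV1D()
for t in range(1, 21):
    kf.step(float(t))      # object moving at +1 unit per step
print(kf.step(None))       # predicted position during a dropout, close to 21
```

A 2D or 3D tracker follows the same predict/update structure with larger state vectors and matrices.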

We support object detection algorithm development by providing tools for simulation, data labeling, and training. This facilitates the implementation and training of deep learning algorithms on custom sensor data, ensuring successful deployment on embedded platforms.

We implement signal processing algorithms for MEMS sensors such as accelerometers and gyroscopes, enabling motion detection features for embedded systems. These features include shock detection, motion detection, and gesture control, all optimized for custom embedded platforms with limited resources and tight power budgets.
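A basic shock detector can be built by thresholding the accelerometer magnitude and ignoring a few samples after each event so one impact is reported once. This sketch assumes samples in units of g (so a device at rest reads about 1 g); the 3 g threshold, the hold-off length, and the `detect_shocks` name are hypothetical values for illustration.

```python
import math
from typing import List, Tuple

Sample = Tuple[float, float, float]  # (ax, ay, az) in g (assumed units)


def detect_shocks(samples: List[Sample], threshold_g: float = 3.0, holdoff: int = 5) -> List[int]:
    """Return sample indices where |a| first exceeds threshold_g.

    After each event, the next `holdoff` samples are skipped (debouncing).
    """
    events = []
    cooldown = 0
    for i, (ax, ay, az) in enumerate(samples):
        if cooldown > 0:
            cooldown -= 1
            continue
        if math.sqrt(ax * ax + ay * ay + az * az) > threshold_g:
            events.append(i)
            cooldown = holdoff
    return events


rest = (0.0, 0.0, 1.0)  # gravity only
trace = [rest] * 3 + [(4.0, 0.0, 1.0), (3.5, 0.0, 1.0)] + [rest] * 3
print(detect_shocks(trace))  # [3]
```

On an MCU the square root is commonly avoided by comparing the squared magnitude against the squared threshold.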

We develop camera calibration algorithms for computing intrinsic and extrinsic parameters of vision systems. Our methods include pattern-based and feature-based calibration, both online and offline, as well as stereo and camera rig calibration for applications like panoramic image stitching.
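Pattern-based calibration typically estimates the intrinsics (focal lengths `fx`, `fy` and principal point `cx`, `cy`) and extrinsics (rotation `R`, translation `t`) by minimizing the reprojection error between detected pattern points and points projected through the camera model. The sketch below shows only that forward model and the error metric for an ideal pinhole camera; lens distortion and the optimizer itself are omitted, and the function names are hypothetical.

```python
import math
from typing import List, Tuple

Mat3 = List[List[float]]
Vec3 = Tuple[float, float, float]
Pixel = Tuple[float, float]


def project(point_w: Vec3, R: Mat3, t: Vec3, fx: float, fy: float, cx: float, cy: float) -> Pixel:
    """Ideal pinhole projection (no distortion): world point -> pixel coordinates."""
    # Extrinsics: X_c = R * X_w + t
    xc = [sum(R[i][j] * point_w[j] for j in range(3)) + t[i] for i in range(3)]
    if xc[2] <= 0:
        raise ValueError("point is behind the camera")
    # Intrinsics: u = fx * X/Z + cx, v = fy * Y/Z + cy
    return fx * xc[0] / xc[2] + cx, fy * xc[1] / xc[2] + cy


def reprojection_rmse(observed: List[Pixel], predicted: List[Pixel]) -> float:
    """Root-mean-square pixel distance, the quantity calibration tries to minimize."""
    if not observed or len(observed) != len(predicted):
        raise ValueError("need equal-length, non-empty point lists")
    sq = [(u - pu) ** 2 + (v - pv) ** 2 for (u, v), (pu, pv) in zip(observed, predicted)]
    return math.sqrt(sum(sq) / len(sq))


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(project((0.1, -0.05, 2.0), identity, (0.0, 0.0, 0.0), 800.0, 800.0, 320.0, 240.0))
```

With these hypothetical parameters the point lands at approximately (360, 220) pixels.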

Our team implements high-level data fusion algorithms for automotive applications. We handle data acquisition, implement interfaces, and develop estimators for object tracking and 3D models, providing fused data for further processing.
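The simplest form of sensor fusion combines independent estimates of the same quantity by inverse-variance weighting, which is the Bayesian result for Gaussian errors: the fused value is a weighted mean and the fused variance is smaller than either input. The sketch assumes independent, unbiased measurements; the radar/camera range numbers are hypothetical. Tracking-oriented fusion would instead run this kind of update inside a filter over time.

```python
from typing import Iterable, Tuple


def fuse(measurements: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Inverse-variance fusion of independent (value, variance) pairs (sketch)."""
    info = 0.0      # sum of 1 / variance (information)
    weighted = 0.0  # sum of value / variance
    for value, var in measurements:
        if var <= 0:
            raise ValueError("variances must be positive")
        info += 1.0 / var
        weighted += value / var
    if info == 0.0:
        raise ValueError("at least one measurement is required")
    return weighted / info, 1.0 / info


# Hypothetical range to an object: radar 10.0 m (var 1.0), camera 12.0 m (var 4.0)
print(fuse([(10.0, 1.0), (12.0, 4.0)]))  # approximately (10.4, 0.8)
```

The fused range sits closer to the more precise radar reading, and its variance (0.8) is lower than the radar's alone.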

Find custom solutions that comply with the highest industry standards, tailored to the specifications of each unique project. We provide embedded software and hardware services across numerous industries!